Survey says ... guessing is for losers!

You'll be winning at the game of good governing if your listening toolkit includes statistically valid surveys

A picture is worth a thousand words, they say. So let me share four that I think encapsulate today’s subject.

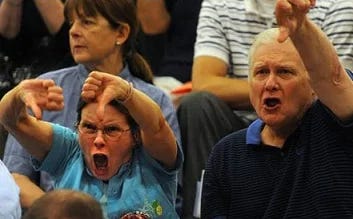

First, a picture of what you may face at a public meeting.

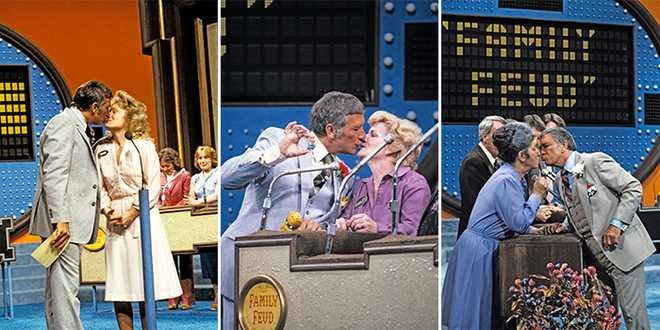

Now, three pictures of a man working with survey data.

Which looks more rewarding to you?

I too would much rather be in the polished, tasseled loafers of Richard Dawson of Family Feud renown, who famously kissed female contestants before they attempted to guess the correct answers from surveys.

Which is a really roundabout way of saying (replete with a late 1970s pop culture reference) that there’s so much to love about surveys and so much to be wary of when it comes to public meetings and social media engagement. (Still, it took me way less than 1,000 words to get there.)

The single most important listening tool I had in my career as a government communicator was a statistically valid survey. Because it answered the following question better than any other:

What do people think about what we’re doing?

This isn’t to say face-to-face listening and engaging on social media aren’t important. They are. Very much so. Which is why the next two posts in this GGF series on Listening will focus on how to do that right.

It’s a Good Government Truism that if we’re going to govern well, we need to know — really know — what the general public thinks. Not just the handful who bark at elected officials during citizen communication or troll your Facebook posts.

Both in-person and online citizen engagement have notable limitations, the most significant of which is that they rarely, if ever, reflect broad public sentiment. I think this is something most folks working in local government understand. If you’re hosting a public meeting about a controversial project, the folks most likely to turn out are the ones most passionately opposed to it. Totally understandable. But if those are the only people you’re getting input from and you want to be responsive to your citizens, then you’re going to make some bad decisions.

Here’s some insight into just how terribly unrepresentative in-person and online input is, from Kevin Lyons of FlashVote. Kevin’s company conducts statistically valid surveys for local governments on hot topic projects. Here’s what his research showed.

Public input sentiment was the opposite of what the whole community wanted about 70% of the time.

Gulp.

So how does Kevin know this? Easy. He looked at data from their first 300 surveys and compared it to what his customers were hearing at public meetings, social media channels, emails and other engagement. Hence the FlashVote tagline, “Serve the many, not just the noisy few.”

Amen and amen.

So why don’t more governments do statistically valid surveys? The easy answer is cost. As Kevin told me, it’s getting harder and harder to reach a representative sample of residents as most folks now have unlisted cell phone numbers. It’s cheap to put a Survey Monkey poll online and think you’re getting good input. You are not — and that’s not to say you should never use something like Survey Monkey. It’s an engagement tool that can be helpful, but it will never get you true representative input because people self-select to take it.

“Engagement with online tools is a way to hear from people with interest in a particular topic,” Kevin said. “It’s a way to get input, and it's good if you want to hear what tennis players think about the tennis courts. Scientific surveys get input from people without a special interest in a topic. That's hard and costs money.”

Good salesman that he is, Kevin is quick to add that while it’s not cheap to get good data, it’s way cheaper than making a bad decision. In fact, he’s quantified the cost savings of bad decisions avoided by FlashVote customers. It’s currently at $400 million-plus and rising.

Here’s an example Kevin gives for a well-intentioned project that looked like it had broad community support. His hometown in Nevada was proposing new garbage cans with wheels. They would make life easier for the folks doing the pickup, thus increasing efficiency and holding down costs. What’s not to love, right? All the online and anecdotal input received was positive. Still, they decided to do a statistically valid survey just to make sure a majority of residents were on board with the change. (As anybody who’s dealt with solid waste collection changes knows, folks typically HATE changes to that service. It’s almost as fraught as changes to the animal control ordinance.)

So they hired FlashVote and conducted a legit survey and Oh. My. God. was their previous input, uh, garbage. A solid 63 percent of residents were opposed to garbage cans with wheels. Why? Most folks in town had steep driveways and some didn’t have garages to store the new cans in. Whereas all the recommending board members had flat driveways and garages.

“They just missed it,” Kevin said. “It was well intentioned, but they just missed it. Me too. I have a flat driveway and a garage, so I was blind to the broader problem until that survey.”

You’d think in a town named Incline Village they wouldn’t have missed it, but who hasn’t had a myopic moment? By double checking on public support, the city saved $7 million by not making the change.

Tools like FlashVote are great for decision support on specific projects. I highly recommend them. I’m an even bigger fan of regularly conducting statistically valid customer surveys of your citizens on a broad range of issues. You do a lot in local government. Are you doing it well? There’s no one better to ask than your citizens.

Comprehensive surveys

Let me start this section by saying, if you’re going to do a full-spectrum satisfaction survey, then commit to it for the long run. Don’t just do one. It’d be a helpful snapshot, no doubt. But the superior value (and I say this as someone who managed the City of Round Rock’s surveys for 20 years) is collecting trend data for a deeper analysis of how well (or not) your government is functioning in the eyes of your residents.

Because it’s a fact that a lot of the most impactful projects we undertake in local government — building roads, parks, libraries and utility systems — take years to plan and complete. And if you’re working strategically — and it’s a Good Government Truism that high performing cities do just that — then it’s gonna take time not just for the projects you undertake to get built, it’s gonna take time for the public to notice the difference they make. As the public servant, you live (and die) with the progress (or lack thereof) you’re making on projects every day. The public does not — unless you’re rebuilding a major arterial road they take every day (that’s where the dying comes in).

Sometimes you make a change that doesn’t take years to implement, and you’ll get quick feedback on how citizens cotton to the new policy or program. Speaking of solid waste services, here’s how opinions changed in the year after Round Rock added curbside recycling.

Going from 47 percent approval to 84 percent approval is considered almost statistically significant. Kidding! That’s a monster change! We had tested opinions on adding curbside recycling in the previous survey and found there was definitely an appetite for it — as long as it didn’t cost too much. You can bet the City Council was happy with the survey results, because I can assure you, they got plenty of emails from the aforementioned group of folks who HATE any change to solid waste services.

Trend data is particularly helpful for those of us in the communications business. In Round Rock, we always asked the question, “Where do you get information about the City?” We then listed a dozen or so outlets — like the local newspaper, the metro daily, local radio, TV news, the city’s utility bill newsletter, etc. Not surprisingly, the answers to that question changed significantly over the years, giving us actionable insight into where to best allocate our limited resources. For example, as newspaper readership began to decline, we stopped advertising in them and began spending those dollars in digital spaces.

For a fun look at how much the media landscape has changed, here’s a chart showing the results in 1998 compared to 2022.

I hasten to add there were other choices in 2022, such as Facebook, Nextdoor, homeowner associations, etc. As this transition was occurring, we made the choice to spend less time on media relations and more time on telling our own story.

It’s important to understand what a statistically significant change from survey to survey is. Generally, it’s any change outside of the margin of error. So if your margin of error is 4.5 percentage points and satisfaction goes up or down by more than 4.5 points, that’s a change you should be paying attention to.
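For the spreadsheet-inclined, here is a minimal sketch of that rule of thumb in Python. It uses the standard textbook formula for the margin of error of a proportion at roughly 95 percent confidence. The sample size of 400 per survey is my assumption for illustration, not a figure from any actual Round Rock survey. The comparison function is a somewhat stricter check than the rule of thumb, because it combines the margins of error from both surveys.

```python
import math

def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion p based on n responses."""
    return z * math.sqrt(p * (1 - p) / n)

def change_is_notable(before: float, after: float, n_before: int, n_after: int) -> bool:
    """True if the change is larger than the combined margin of error of both surveys."""
    moe_before = margin_of_error(before, n_before)
    moe_after = margin_of_error(after, n_after)
    combined = math.sqrt(moe_before ** 2 + moe_after ** 2)
    return abs(after - before) > combined

# Hypothetical sample size: 400 completed surveys in each wave.
print(round(margin_of_error(0.5, 400) * 100, 1))   # 4.9 (percentage points)
print(change_is_notable(0.47, 0.84, 400, 400))     # True: 47 to 84 percent is a real shift
print(change_is_notable(0.60, 0.63, 400, 400))     # False: 3 points is within the noise
```

Your survey vendor will run these numbers for you, but it helps to know roughly what is behind them when a councilmember asks whether a 3-point dip means anything.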

There’s no need to do a big survey every year. Every two years is just fine. And, frankly, they really aren’t that expensive in the grand scheme of things. If you’ve got a budget in the tens or hundreds of millions of dollars, it’s my humble and correct opinion you can afford $20K or so every couple of years to hire an outside firm to conduct the survey. Unless, of course, you don’t want to know what folks really think. If that’s you, how the hell did you wind up reading Good Government Files?

Those biennial surveys need to be statistically valid, obviously. That means you’ll need enough responses to get within a 4 to 5 percent margin of error, and the demographic makeup of respondents should closely align with your community’s latest U.S. Census data for race/ethnicity, gender and age. It used to be vendors could get a large enough sample by making a poo-poo ton of phone calls. Those days are long gone. Now, it’ll take mailing the survey, followed up by a poo-poo ton of phone calls to encourage folks to fill it out and mail it back or to take the survey online.
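How many responses is "enough"? As a rough sketch, the standard sample-size formula for a proportion gives a ballpark. The function name and the optional population adjustment are my own illustration, and the formula assumes a simple random sample at about 95 percent confidence, which is a simplification of what a professional vendor actually does.

```python
import math
from typing import Optional

def required_sample_size(margin: float, population: Optional[int] = None,
                         p: float = 0.5, z: float = 1.96) -> int:
    """Completed responses needed for a given margin of error (as a fraction).

    p = 0.5 is the most conservative assumption about how opinion splits.
    If population is given, a finite population correction is applied.
    """
    n = (z ** 2) * p * (1 - p) / (margin ** 2)
    if population is not None:
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)

print(required_sample_size(0.05))  # 385 responses for a 5-point margin
print(required_sample_size(0.04))  # 601 responses for a 4-point margin
```

Notice that the answer barely depends on how big your city is once you are past a few thousand residents. What it does not capture is the hard part described above: getting those few hundred responses from a group that actually looks like your community.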

Like I said, we did them every two years in Round Rock and the insights gained (and, perhaps more importantly, the City Council direction confirmed) were priceless. I swear, I can’t tell you how many times the City Council began to prioritize an issue and on the next survey that issue would pop up as an emerging need. Obviously, we had councilmembers who were plugged in to the community and listening.

Here are a few more pointers from Kevin on survey questions.

5-point scale type questions are good for measuring something, making a change, and then measuring again.

If you’re asking questions on issues like curbside recycling or wheels on trash containers, you need to ask questions that give you actionable data you can use to make decisions.

Ask questions with a price tag. Don’t just ask folks if they want more pickleball courts. Of course they do. The better question is, what are you willing to pay in tax rate or fee increases for those pickleball courts? Never ask about benefits without costs, and don’t ask about costs without listing benefits.

Good surveys should tell you not just how citizens rate your levels of service, but what services are most important to them. The kind of importance-satisfaction analysis that follows lets you know if you’re investing in the specific areas residents believe are most important. If you are, then citizens will tend to give you higher scores on your overall delivery of city services.
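To make that concrete, here is a small sketch of one common way to score importance against satisfaction: multiply the share of residents who rank a service as most important by the share who are not satisfied with it. The service names and percentages below are made up for illustration, and your survey firm may calculate its ratings differently.

```python
def importance_satisfaction(importance: float, satisfaction: float) -> float:
    """Higher score = important to residents AND leaving them less than satisfied."""
    return importance * (1 - satisfaction)

# Hypothetical results: (share ranking it a top priority, share satisfied)
services = {
    "Street maintenance": (0.45, 0.55),
    "Parks": (0.20, 0.85),
    "Library": (0.10, 0.90),
}

# Rank services from highest to lowest investment priority.
ranked = sorted(
    services,
    key=lambda name: importance_satisfaction(*services[name]),
    reverse=True,
)

for name in ranked:
    score = importance_satisfaction(*services[name])
    print(f"{name}: {score:.4f}")
```

In this made-up example, street maintenance lands at the top because lots of residents care about it and nearly half are not satisfied, while the library scores low because it is already doing well with the people who use it.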

This leads me to the final feature I highly recommend when choosing a company to conduct your survey. You want someone who can benchmark your results with other cities in your state or region as well as nationally. It will make your results much more meaningful. I recall in our early days of surveying in Round Rock, we hired a local public affairs firm to do the work for us. I specifically recall the company representative presenting the results to the city council and noting that satisfaction with communications was around 60 percent. I more specifically recall him noting that 60 percent number should be significantly higher. What was he basing that statement on, I wondered. He never said. There was nothing to compare it to. (I have since suspected he was fishing for consulting work to “help” me.) Once we hired ETC Institute, which does benchmarking, I learned that our communications ratings, which had steadily risen to the high 60s as our efforts got more dialed in because we used what we learned from the survey, were actually stellar when compared to state and national averages. By 2022, the communications satisfaction rating had risen to 72 percent, a whopping 34 points higher than the national average. (Of course, I’m not bitter about that mealy-mouthed consultant who threw shade on my hard work in front of God, the City Council and a rapt audience on our government access channel. What gave you that idea?)

It wasn’t just the comms shop that felt better about the survey results once the benchmarking started. Recall an earlier GGF on budgeting communications that started when the firefighter union threatened a referendum that, if approved, had the potential to put the city in dire straits financially. I’m sure the union had looked at the survey and seen satisfaction rates in the high 80s for the Fire Department. That was before we got benchmark data. When that happened, we learned that ratings that high were actually just average for fire departments. I still recall the chagrin of then-City Manager Jim Nuse when he looked at the FD’s benchmark number.

When you’re listening and responding by working to address issues cited by citizens, it makes them happy. That’s why Round Rock gets such great results in its surveys. And doing that year after year, decade after decade, means your peer cities get to eat your statistically significant dust. We know that because ETC Institute, which has conducted the last eight surveys for the city, started giving out a “Leading the Way” award a couple of years ago for outstanding achievement in the delivery of services to residents. (ETC has surveyed more than 2 million people in 1,000 cities since 2010.) Round Rock has earned the award for its last two surveys by ranking in the top 10 percent among communities with a population of 100,000 or more regarding its performance in the three core areas assessed on ETC’s DirectionFinder Survey:

Satisfaction with overall quality of services

Satisfaction with customer service provided by employees

Satisfaction with the value residents think they receive for local taxes and fees

It’s gotten to the point where the presentation of survey results has been a real feel-good moment for the City. And why not? The praise is coming directly from the people we serve.

If you’re not doing surveys and just looking at the cesspool of chatter that happens on platforms like Nextdoor, it’s easy to think that’s what everyone is thinking about your work. You need to regularly remind yourself — and especially your elected leaders — that not everyone in town is on Facebook.

“You think you know what’s going on out there, but you just don’t,” Kevin says. “You can always be surprised.”

And in a good way, if you’ve been using the right listening tools.
